Sign Language to Text using Deep Learning

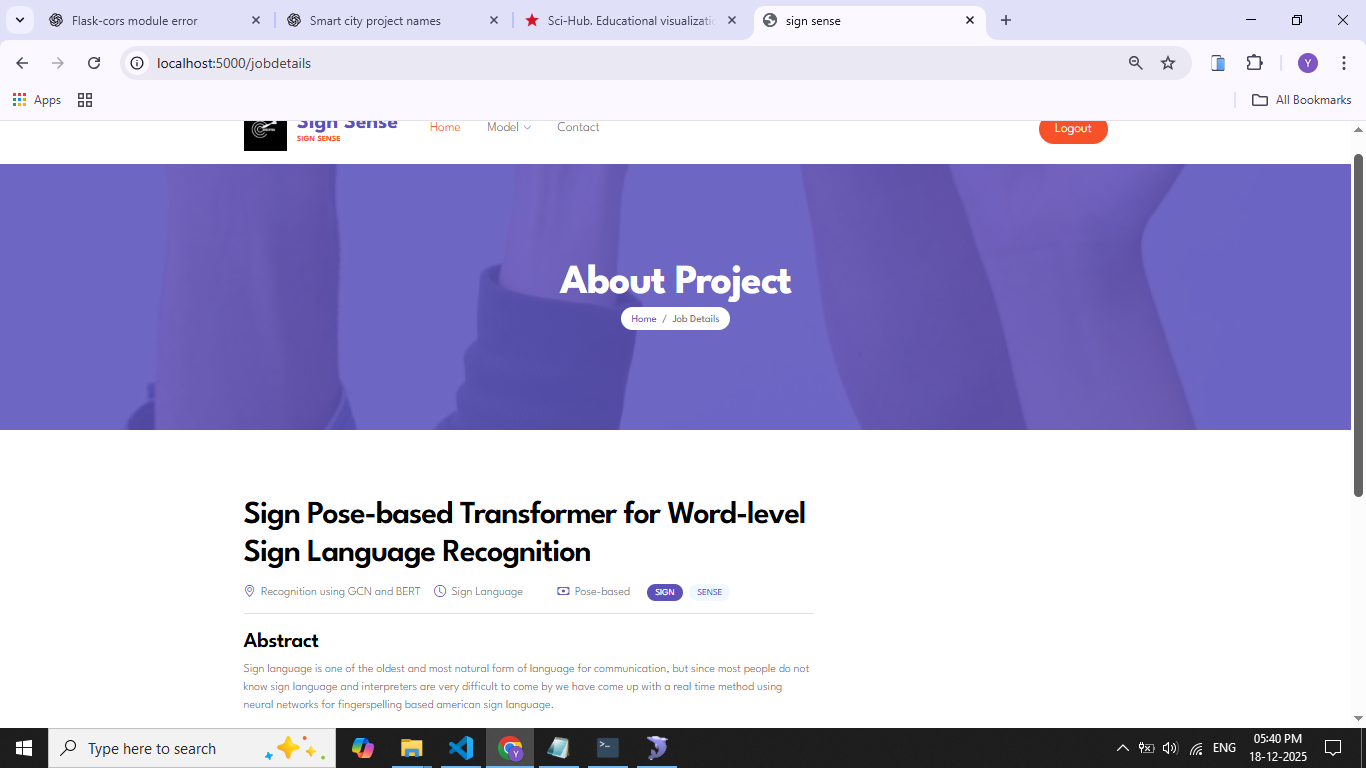

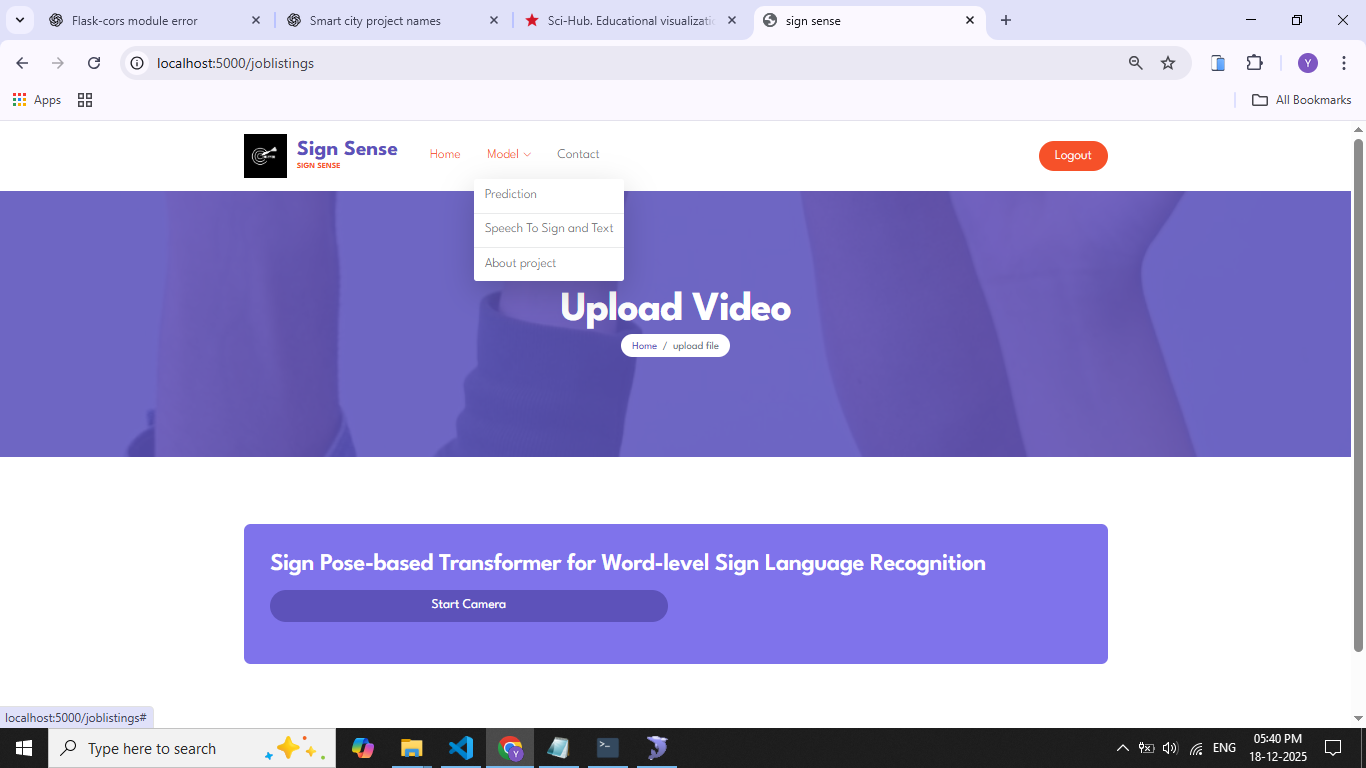

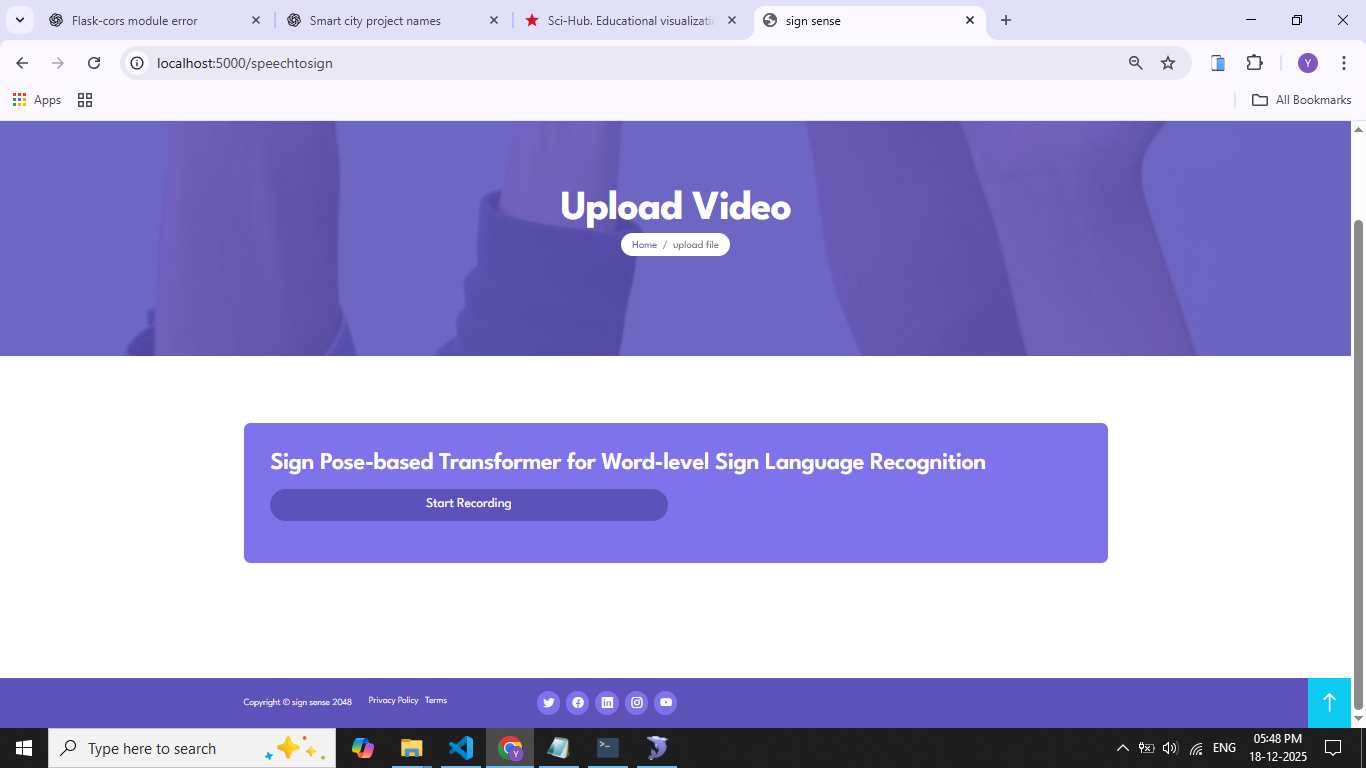

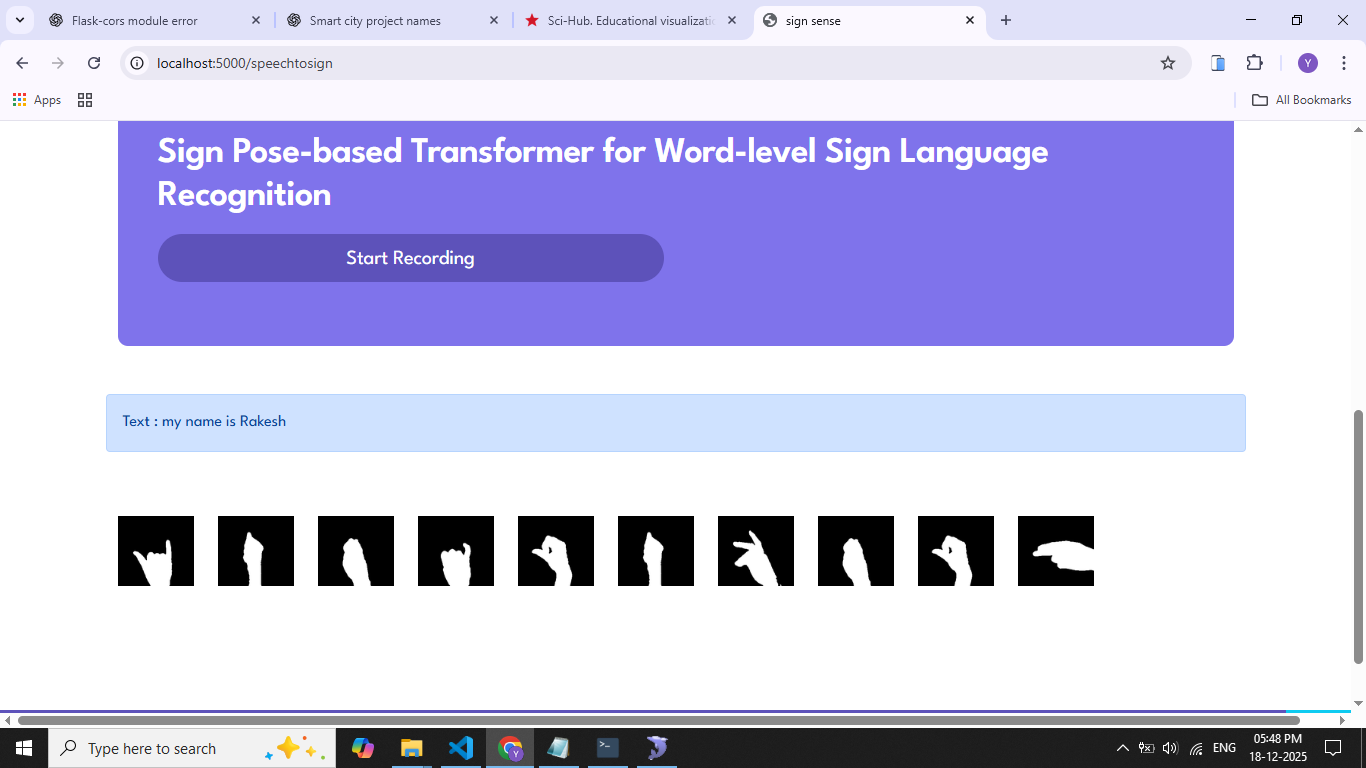

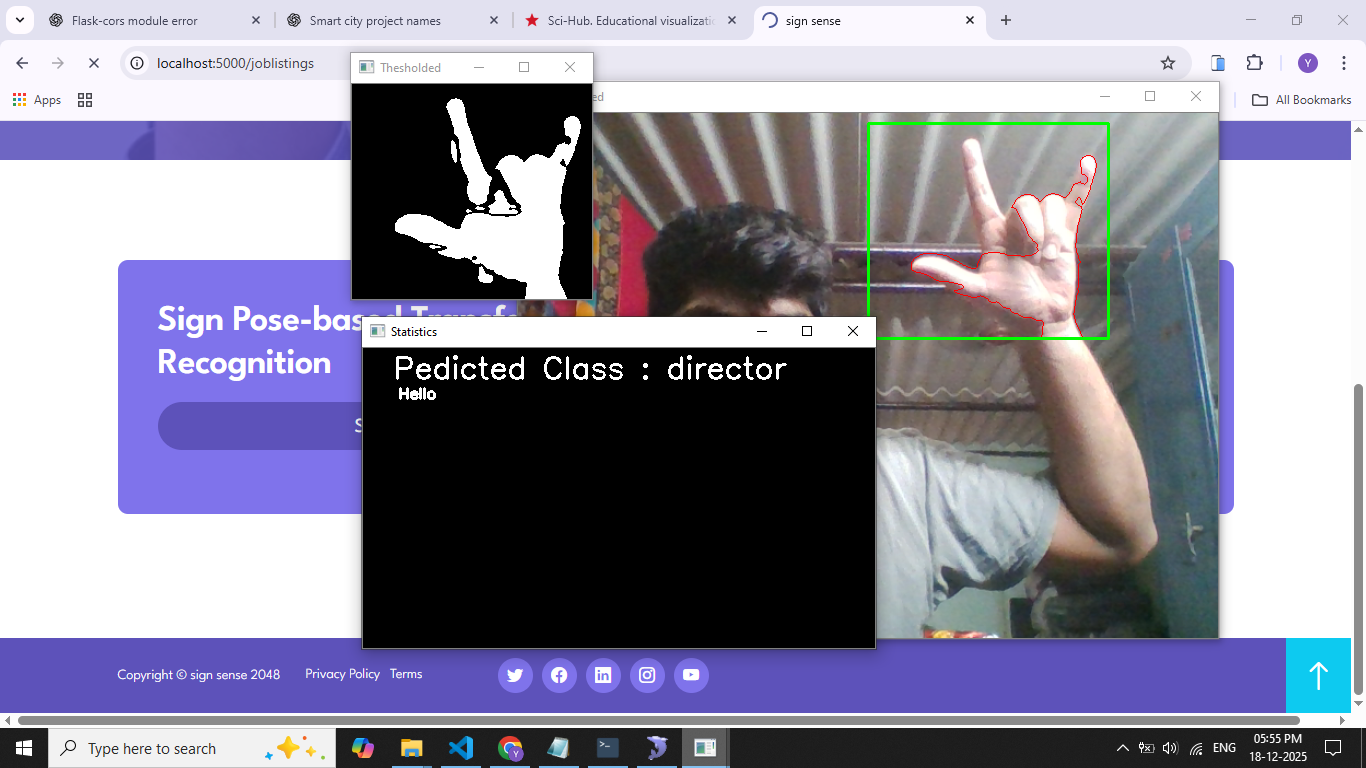

Sign Language to Text Conversion using Deep Learning is a system designed to bridge the communication gap between deaf and hard-of-hearing people and those who do not know sign language. Sign language is a primary mode of communication for people with speech and hearing disabilities, but most people do not understand it, which creates barriers in daily interactions. This project aims to recognize sign language gestures automatically and convert them into readable text using deep learning techniques.

The proposed system uses a camera to capture hand gestures and movements in real time. The captured frames are preprocessed to remove noise, normalize lighting, and extract relevant features such as hand shape, orientation, and motion. Two kinds of deep learning model are then combined to learn spatial and temporal patterns from sign language data: Convolutional Neural Networks (CNNs) extract features from each image, and Long Short-Term Memory (LSTM) networks model how those features change across the sequence of frames that makes up a gesture. The trained model classifies each gesture and translates it into the corresponding text, which is displayed on the screen.

The system improves accessibility, supports inclusive communication, and can be extended to real-time applications in education, customer service, and public assistance.
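The last step of the pipeline, turning the classifier's output into on-screen text, can be sketched as follows. This is a minimal illustration and not the project's actual code: it assumes the model emits one label per frame, and the function names, the `"_"` "no sign" label, the window size, and the minimum run length are all hypothetical choices made for the example.

```python
from collections import Counter
from itertools import groupby


def smooth_labels(frame_labels, window=5):
    """Replace each per-frame label with the majority label in a centered window.

    This suppresses single-frame misclassifications (assumed to be noise).
    """
    half = window // 2
    smoothed = []
    for i in range(len(frame_labels)):
        lo = max(0, i - half)
        hi = min(len(frame_labels), i + half + 1)
        majority, _count = Counter(frame_labels[lo:hi]).most_common(1)[0]
        smoothed.append(majority)
    return smoothed


def labels_to_text(frame_labels, window=5, min_run=3, blank="_"):
    """Collapse a stream of per-frame labels into a text string.

    A label is emitted once for each run of at least `min_run` consecutive
    frames. The hypothetical `blank` label (no sign detected) is never
    emitted, so a pause between two identical signs yields a repeated letter.
    """
    smoothed = smooth_labels(frame_labels, window)
    out = []
    for label, run in groupby(smoothed):
        if label != blank and len(list(run)) >= min_run:
            out.append(label)
    return "".join(out)


if __name__ == "__main__":
    # Six frames of "H" with one misclassified frame, a pause, six frames of "I".
    frames = ["H", "H", "X", "H", "H", "H"] + ["_"] * 4 + ["I"] * 6
    print(labels_to_text(frames))
```

Run as a script, this prints `HI`: the stray `X` frame is outvoted by its neighbours, and the pause separates the two signs. A real system would tune the window and run length to the camera's frame rate and to how long signers typically hold a gesture.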

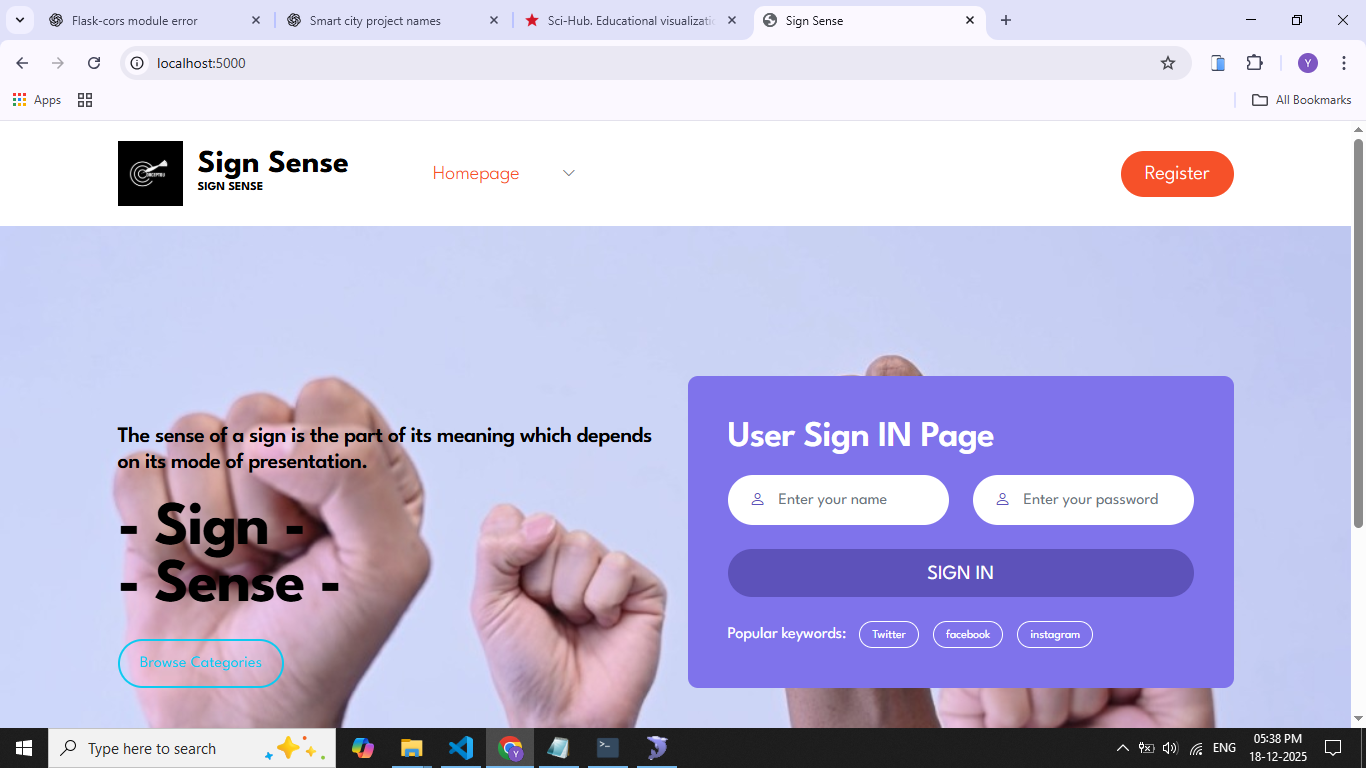

• Modern and responsive design

• Clean and maintainable code

• Full documentation included

• Ready to deploy